Google Cloud Platform: Cloud Build trial and resources

Google Cloud Platform Cloud Build (formerly part of GCP Container Registry/Container Builder) is part of the GCP portfolio of developer tools: it integrates with GCP Source Repositories, GitHub and Bitbucket and deploys seamlessly to GCP Container Registry.

This article covers my experience of getting started with Cloud Build.

Background

My business infrastructure runs on Google Cloud Platform: the apps are written in Go, source code is stored in GCP Source Repositories and for operational environments I use a combination of Compute Engine and App Engine (Standard) resources, as well as supporting technologies (Cloud DNS, SDN, plus G-Suite for the main domain).

I’m in the process of breaking down a large application into smaller services. It’s the first of a set of iterations toward making the application cloud native. I have some cooperating Go gRPC services which use different GCP resources and, probably like many developers, I’m investigating whether my stuff should eventually be hosted within Kubernetes.

Resources

There’s a lot of literature on how to get up and running with GKE (Quick-Start walkthroughs, YouTube videos from the Google team, Coursera materials and QwikLabs practice labs) but I was never clear on how I should go about building an app with dependencies, containerising it and deploying it to GKE. A good starting point was this YouTube video by Kelsey Hightower from Google Next 2017:

Here Kelsey runs through an example app which is built on Container Builder (as Cloud Build was named at the time) and deploys it to a Kubernetes cluster on GKE. Really useful stuff. Covers all the bases. Straightforward.

Scratch App

I wanted to begin with something which was indicative of real-world application complexity, but also simple enough to reveal the workings of Cloud Build. I started with a basic app with a twist. The app simply writes out a list of ten ID numbers (actually UIDs generated by the Segment.io KSUID Go package), but it does this by utilising packages from one of my partially decomposed Go gRPC services. This service is currently decoupled from the application monolith, but has not yet been sufficiently refactored to be considered a microservice. So this simple app comes with baggage which, oddly enough, makes it perfect for the testing I wanted to perform, because there are lots of superfluous dependencies to include as build steps.
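To give a feel for how small the scratch app is, here is a rough stand-in written against the standard library only. It is not the real app: `newID` is a hypothetical substitute for the KSUID package (a timestamp plus random bytes, hex-encoded), and `generateIDs` is a name made up for this sketch. The real app gets its IDs from the KSUID package and pulls in the service packages described above.

```go
package main

import (
	"crypto/rand"
	"encoding/binary"
	"encoding/hex"
	"fmt"
	"os"
	"time"
)

// newID is a hypothetical stand-in for a KSUID: a 4-byte big-endian Unix
// timestamp followed by 16 random bytes, hex-encoded to 40 characters.
// It is NOT a real KSUID (different epoch and a different text encoding).
func newID(now time.Time) (string, error) {
	buf := make([]byte, 20)
	binary.BigEndian.PutUint32(buf[:4], uint32(now.Unix()))
	if _, err := rand.Read(buf[4:]); err != nil {
		return "", err
	}
	return hex.EncodeToString(buf), nil
}

// generateIDs returns n identifiers produced by newID.
func generateIDs(n int) ([]string, error) {
	ids := make([]string, 0, n)
	for i := 0; i < n; i++ {
		id, err := newID(time.Now())
		if err != nil {
			return nil, err
		}
		ids = append(ids, id)
	}
	return ids, nil
}

func main() {
	// The scratch app writes out ten IDs, one per line.
	ids, err := generateIDs(10)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	for _, id := range ids {
		fmt.Println(id)
	}
}
```

Because this sketch has no third-party imports it needs no go get step, which is exactly the part of the build the real app makes interesting.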

The local build process for the Go code was:

Check out code from GCP Source Repository

Run go get for required imports

Run go test to execute tests

Run go build to produce the final binary

Additional steps would be required to containerise the app, but I’ll come to that later. For the purposes of this test I decided to replace go build with go install.

Setup

Firstly, I needed to enable the Cloud Container Builder API in my GCP project. Container Builder has been renamed to Cloud Build within the GCP web UI, but the API retains the original name. You would also need to enable the Cloud Source Repositories API if you plan to use that for your source code (I already use GCP Source Repositories so this had already been enabled in my case).

Then, referring to the Kelsey Hightower presentation, I needed to add two files to the scratch app source folder: cloudbuild.yaml and a Dockerfile.

cloudbuild.yaml

All of the heavy lifting occurs within the cloudbuild.yaml file. The file contains a sequence of steps which Cloud Build follows. Each step utilises a builder from a GCP-supplied set of builders (although it is possible to create custom builders).

I began with a minimal definition (which didn’t work, so maybe don’t run it; I’ll work through my mistakes here):

    steps:
    - name: "gcr.io/cloud-builders/git"
      env: ["GOPATH=/workspace"]
      args: ["clone", "https://source.developers.google.com/p/{PROJECT_ID}/r/{REPO}", "/workspace/src/{REPO}"]
    - name: "gcr.io/cloud-builders/go"
      args: ["install", "./src/{REPO}/scratch"]
      id: "go-install"
    - name: "gcr.io/cloud-builders/docker"
      args: ["build", "-t", "gcr.io/${PROJECT_ID}/scratch:0.0.1-dev", "./workspace/src/{REPO}/gcp/scratch"]
      id: "docker-build"
    images: ["gcr.io/${PROJECT_ID}/scratch:0.0.1-dev"]

Each step is executed by a cloud-builder and each cloud-builder is a docker image, so the value for the name parameter is the full path to that image. Cloud Build does its work in a location which has the path /workspace and this location persists between steps. I have explicitly set GOPATH to the default workspace path in the env parameter. Any additional values which are to be supplied to the cloud builder go in the args parameter. Finally, an optional id parameter is used to identify each step.
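The shape of a build definition (steps with name, env, args and id, plus a top-level images list) can be sketched as plain Go structs. This is only an illustration: the struct names are made up, the project, repo and image names are placeholders, and `unbuiltImages` is a hypothetical lint of my own, not something Cloud Build provides. As far as I know Cloud Build also accepts the build config as JSON, which is what the sketch prints.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// Step mirrors the per-step fields used in the cloudbuild.yaml above.
type Step struct {
	Name string   `json:"name"`
	Env  []string `json:"env,omitempty"`
	Args []string `json:"args,omitempty"`
	ID   string   `json:"id,omitempty"`
}

// Build is the top-level document: ordered steps plus the images to push.
type Build struct {
	Steps  []Step   `json:"steps"`
	Images []string `json:"images,omitempty"`
}

// taggedImages collects every value that follows a "-t" flag in a step's
// args, i.e. the tags a docker build step would produce.
func taggedImages(b Build) map[string]bool {
	tags := make(map[string]bool)
	for _, s := range b.Steps {
		for i := 0; i+1 < len(s.Args); i++ {
			if s.Args[i] == "-t" {
				tags[s.Args[i+1]] = true
			}
		}
	}
	return tags
}

// unbuiltImages is a hypothetical lint (not part of Cloud Build): it returns
// the entries of Images that no step tags with "-t".
func unbuiltImages(b Build) []string {
	tags := taggedImages(b)
	var missing []string
	for _, img := range b.Images {
		if !tags[img] {
			missing = append(missing, img)
		}
	}
	return missing
}

func main() {
	// Placeholder project, repo and image names, for illustration only.
	b := Build{
		Steps: []Step{
			{
				Name: "gcr.io/cloud-builders/go",
				Env:  []string{"GOPATH=/workspace"},
				Args: []string{"install", "./src/example-repo/scratch"},
				ID:   "go-install",
			},
			{
				Name: "gcr.io/cloud-builders/docker",
				Args: []string{"build", "-t", "gcr.io/example-project/scratch:0.0.1-dev", "."},
				ID:   "docker-build",
			},
		},
		Images: []string{"gcr.io/example-project/scratch:0.0.1-dev"},
	}
	if missing := unbuiltImages(b); len(missing) > 0 {
		fmt.Fprintln(os.Stderr, "images never tagged by a step:", missing)
		os.Exit(1)
	}
	enc := json.NewEncoder(os.Stdout)
	enc.SetIndent("", "  ")
	if err := enc.Encode(b); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

A check like this is cheap to run locally before submitting, since a mismatch between the images list and the tag passed to docker build otherwise only shows up at the end of a build.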

I used three different builders: gcr.io/cloud-builders/git; gcr.io/cloud-builders/go; and gcr.io/cloud-builders/docker.

The steps I defined were intended to do the following work:

Execute a git clone and put the GCP repo (GCP Source Repository URL format is shown above) into /workspace/src/{REPO}

Execute a go install to build and install the go code from ./src/{REPO}/scratch (where current directory is /workspace)

Execute docker build to create the scratch image in GCP Container Registry (path shown above in the cloudbuild.yaml) with the scratch binary

Now, a number of things are wrong with this cloudbuild.yaml but for now let’s just punt, pray and see what happens!

First Attempt

The build is executed via gcloud which is the command line utility for interacting with GCP resources. I navigated to the directory containing my cloudbuild.yaml file and ran:

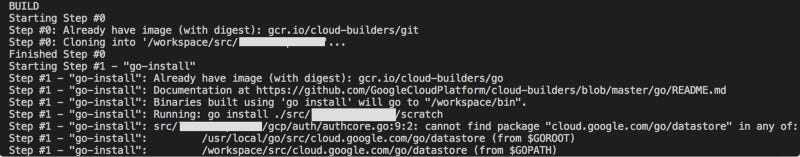

gcloud builds submit --config=cloudbuild.yamlAnd the result was:

We can see from this truncated output that step 0 (git clone) executed correctly. We can also see that in step 1, the go builder image will go install to /workspace/bin. That’s as far as it went: the go code did not compile because the packages which would normally be installed with go get were not included in the build definition. So I needed to add these packages.

Second Attempt

On GitHub there is a cloudbuild.yaml which contains example build steps. I used this to add the go get build steps to my own cloudbuild.yaml. Shown beneath are two of the new build steps:

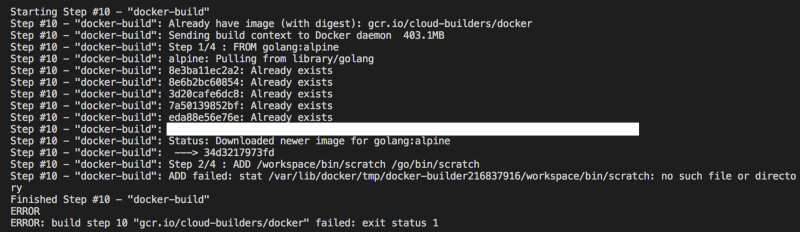

- name: "gcr.io/cloud-builders/go" args: ["get", "cloud.google.com/go/datastore"] - name: "gcr.io/cloud-builders/go" args: ["get", "github.com/segmentio/ksuid"]Then I re-ran the gcloud command. This time the go get commands and the git clone both ran correctly, but there was a problem with the docker build step:

The build step failed when attempting to add the binary to the docker image. So let’s take a look at the Dockerfile.

Third Attempt

The Dockerfile contained three lines:

    FROM golang:alpine as builder
    ADD /workspace/bin/scratch /go/bin/scratch
    ENTRYPOINT [ "/go/bin/scratch" ]

What threw me a little was that the output from the first build attempt clearly stated that go install would output to /workspace/bin, but the docker build step could not locate the scratch binary which should be in that location. However, I knew that Cloud Build used /workspace as the working path and that /workspace was the current build location, so I made one small change to the Dockerfile. I supplied a relative path as the first argument to ADD:

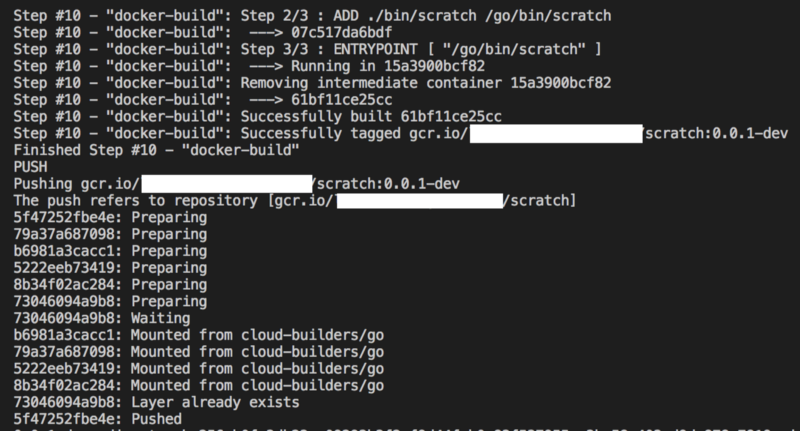

FROM golang:alpine as builderADD ./bin/scratch /go/bin/scratchENTRYPOINT [ "/go/bin/scratch" ]Then after I re-ran the gcloud command I got the following output:

Great. At this point the build definition worked, the cloud build completed successfully and the docker image was pushed to GCP Container Registry. Checking Container Registry in the GCP web UI confirmed this.
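One way to reason about why the relative path helped is to model the two path rules involved. As I understand it, go install in GOPATH mode writes to $GOPATH/bin, and Docker looks up an ADD source inside the build context even when it is written with a leading slash. The sketch below assumes the build context was /workspace, which is an assumption on my part; `installTarget` and `resolveAddSource` are hypothetical helper names and `example-repo` is a placeholder.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// installTarget models where `go install` writes a binary in GOPATH mode:
// $GOPATH/bin/<last element of the package path>. Assumes GOBIN is unset.
func installTarget(gopath, pkg string) string {
	return path.Join(gopath, "bin", path.Base(pkg))
}

// resolveAddSource models how an ADD source is located: always relative to
// the build context, so a leading slash does not mean the filesystem root.
// Simplification: sources that escape the context with ".." are not handled.
func resolveAddSource(contextDir, src string) string {
	return path.Join(contextDir, strings.TrimLeft(src, "/"))
}

func main() {
	const workspace = "/workspace" // assumed GOPATH and assumed build context

	bin := installTarget(workspace, "./src/example-repo/scratch")
	fmt.Println("go install writes:", bin)

	for _, src := range []string{"/workspace/bin/scratch", "./bin/scratch"} {
		got := resolveAddSource(workspace, src)
		fmt.Printf("ADD %s looks for %s (match: %v)\n", src, got, got == bin)
	}
}
```

Under these assumptions the absolute form resolves to /workspace/workspace/bin/scratch, which does not exist, while the relative form lands on the binary that go install produced.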

Docker

As a last step for this Cloud Build trial, I needed to prove that the image was valid, by pulling it down from GCP Container Registry (gcr.io) via docker, to see if the scratch binary worked as expected. So first I executed a docker pull.

docker pull gcr.io/{PROJECT_ID}/scratch:0.0.1-dev

Then I executed a docker run which executed the scratch binary:

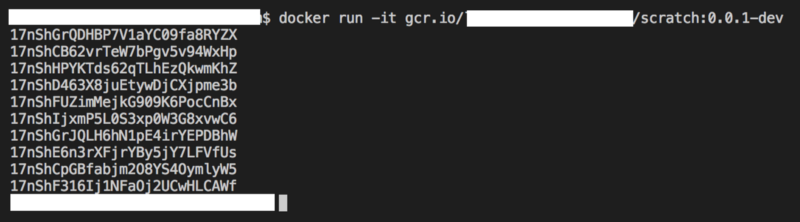

docker run -it gcr.io/{PROJECT_ID}/scratch:0.0.1-dev

The output showed ten KSUID values which were generated by the containerised scratch app. This was the expected output so the docker image was valid.
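The same check can be done mechanically rather than by eye. A KSUID in its string form is 27 base62 characters, so a small filter can confirm that the container printed ten plausible values. This is a sketch under the assumption that the app prints one ID per line and nothing else; `looksLikeKSUID` and `checkOutput` are names invented here.

```go
package main

import (
	"fmt"
	"io"
	"os"
	"strings"
)

// looksLikeKSUID reports whether s has the shape of a KSUID string:
// exactly 27 characters, each one of 0-9, A-Z or a-z (base62).
// It checks the shape only, not whether the value decodes to a valid KSUID.
func looksLikeKSUID(s string) bool {
	if len(s) != 27 {
		return false
	}
	for i := 0; i < len(s); i++ {
		c := s[i]
		isDigit := c >= '0' && c <= '9'
		isUpper := c >= 'A' && c <= 'Z'
		isLower := c >= 'a' && c <= 'z'
		if !isDigit && !isUpper && !isLower {
			return false
		}
	}
	return true
}

// checkOutput splits out on whitespace and verifies that it contains
// exactly want KSUID-shaped values.
func checkOutput(out string, want int) error {
	ids := strings.Fields(out)
	if len(ids) != want {
		return fmt.Errorf("expected %d IDs, got %d", want, len(ids))
	}
	for _, id := range ids {
		if !looksLikeKSUID(id) {
			return fmt.Errorf("%q does not look like a KSUID", id)
		}
	}
	return nil
}

func main() {
	// Reads the container output from standard input.
	data, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	if err := checkOutput(string(data), 10); err != nil {
		fmt.Fprintln(os.Stderr, "image check failed:", err)
		os.Exit(1)
	}
	fmt.Println("ok: ten KSUID-shaped values")
}
```

Saved as, say, check.go (a hypothetical file name), it could be fed the container output with `docker run --rm gcr.io/{PROJECT_ID}/scratch:0.0.1-dev | go run check.go`, leaving out the -it flags because the output is piped.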

What Just Happened?

I started with a Go scratch app which was committed into GCP Source Repositories.

This app contained package imports from github.com, cloud.google.com and golang.org. The cloudbuild.yaml file was provided with go cloud builder steps to execute the go get statements needed to bring these package imports into the scope of the build.

I added a git cloud builder step to clone my GCP source repo into the Cloud Build workspace.

I added another go cloud builder step to run a go install to build and install the scratch app.

Then I added a docker cloud builder step to copy the scratch app binary and build the image. GCP Cloud Build took care of deploying the image to GCP Container Registry.

Lastly, I pulled the docker image and ran it to verify that the scratch app had built and deployed correctly.

Some Recommendations

It is worth pointing out that the base image used in my Dockerfile expects that the binaries will be added to /go/bin. The binary will execute without issues if this path is used. If, however, you need to put the binary in any other location (for whatever reason), you may see a ‘Permission denied’ message when you attempt to run the docker image. In this case, you will need an additional line in your Dockerfile to add the necessary execute permission on your binary:

    FROM golang:alpine
    ADD ./bin/scratch /example/custom/path/scratch
    RUN ["chmod", "+x", "/example/custom/path/scratch"]
    ENTRYPOINT [ "/example/custom/path/scratch" ]

One other recommendation, before you begin building images, is to pull the base image down (in this case it was golang:alpine), shell into it with (for example)…

docker run -it golang:alpine

…and just take a look around. See what is preinstalled (software and directory structure), what is missing and what you might need to install additionally to support your image. It may also be worth building the first image with the Dockerfile entrypoint set to the shell, so that you can look around and see where your software has installed:

    FROM golang:alpine
    ADD ./bin/scratch /example/custom/path/scratch
    RUN ["chmod", "+x", "/example/custom/path/scratch"]
    ENTRYPOINT [ "/bin/sh" ]

Bear in mind that if the entrypoint is not a valid executable, or the permissions are insufficient to run it, then the finalised image might be inaccessible.
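The ‘Permission denied’ case comes down to the execute bits on the binary, and those can be inspected before the file ever reaches the image. The following is a minimal sketch in Go, using a throwaway temp file in place of the real binary; `hasExecBit` and `ensureExecutable` are hypothetical helpers, and the behaviour described is that of Unix-style permissions.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// hasExecBit reports whether any execute bit (owner, group or other) is set.
func hasExecBit(mode os.FileMode) bool {
	return mode.Perm()&0o111 != 0
}

// ensureExecutable sets the execute bits on the file at p if none are set,
// which is roughly what the chmod +x line in the Dockerfile does.
func ensureExecutable(p string) error {
	info, err := os.Stat(p)
	if err != nil {
		return err
	}
	if hasExecBit(info.Mode()) {
		return nil
	}
	return os.Chmod(p, info.Mode().Perm()|0o111)
}

// run demonstrates the check on a placeholder file created without
// execute permission.
func run() error {
	dir, err := os.MkdirTemp("", "scratch-demo")
	if err != nil {
		return err
	}
	defer os.RemoveAll(dir)

	bin := filepath.Join(dir, "scratch")
	if err := os.WriteFile(bin, []byte("placeholder"), 0o644); err != nil {
		return err
	}
	info, err := os.Stat(bin)
	if err != nil {
		return err
	}
	fmt.Println("executable before:", hasExecBit(info.Mode()))

	if err := ensureExecutable(bin); err != nil {
		return err
	}
	info, err = os.Stat(bin)
	if err != nil {
		return err
	}
	fmt.Println("executable after:", hasExecBit(info.Mode()))
	return nil
}

func main() {
	if err := run(); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

Whether the bits survive into the image depends on how the file is added, so the chmod line in the Dockerfile remains the more direct fix; this only helps to rule out a binary that was never executable in the first place.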

Conclusion

This article covered the manual execution of a Cloud Build definition, but I have a host of things to do next:

Deconstruct/refactor the original services and create a cloudbuild.yaml for each repo.

Add the test build steps.

Parameterise the image name (using tags… see the Kelsey Hightower presentation for a demo on how that is done).

Add Build Triggers within GCP Cloud Build for these repos to automate the CI/CD pipeline (again see the Kelsey Hightower presentation for a demo of this).

Provision the GCP Kubernetes Engine cluster with appropriate GCP resource scopes (Storage, Datastore, Pub/Sub, CloudSQL proxy etc.)

Automate the deployment (there is also a kubectl cloud builder).

I’ll follow up this article as I progress through the work.

Thanks for reading.